
Fourier Transform for Audio in Python

As part of my research into AI and recreating biological aspects of it in Python code, I've been stumped (or challenged) by the signal processing parts. At the time of this writing I'm working on recreating auditory receptors, and this is where our story begins…

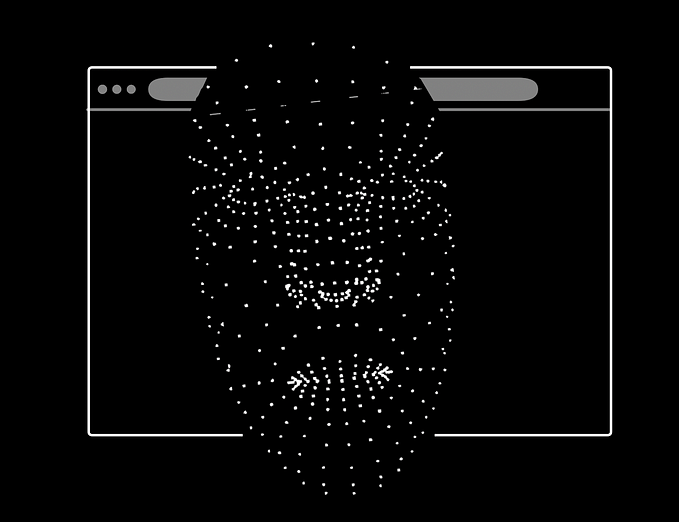

You see, inside your ear the cochlea perceives sound frequencies in an orderly manner. If you've ever seen a graphic EQ (or an audio spectrum analyzer), the concept is fairly similar:

This is a rather busy illustration, but then again there's a lot of information condensed here. On the left is a frequency spectrum analyzer (often loosely called a graphic EQ) and on the right is your ear. The important comparison is that your ear perceives sound in discrete regions that loosely match specific sound frequencies, and the intensity in each region can be neatly represented by the vertical blocks. I've also added some units, along with real-world examples so you can get a sense of what they correspond to: decibels (dB, a relative unit) for volume or loudness, and hertz (Hz, cycles per second) for frequency.
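To make the analyzer idea concrete, here is a minimal sketch, assuming NumPy, of how a block of audio samples can be turned into one level per frequency band using a Fourier transform (`np.fft.rfft`). The function name `band_levels_db` and the band edges are hypothetical choices for illustration, and the levels are relative dB values, not calibrated sound pressure levels.

```python
import numpy as np

def band_levels_db(samples, sample_rate, band_edges_hz):
    """Return one relative dB level per band, like the bars of a spectrum analyzer."""
    # Apply a Hann window to reduce spectral leakage between FFT bins
    windowed = samples * np.hanning(len(samples))
    # Real FFT: magnitudes for frequencies from 0 up to sample_rate / 2
    magnitudes = np.abs(np.fft.rfft(windowed))
    freqs = np.fft.rfftfreq(len(samples), d=1.0 / sample_rate)
    levels = []
    for low, high in zip(band_edges_hz[:-1], band_edges_hz[1:]):
        in_band = (freqs >= low) & (freqs < high)
        # Sum the energy of all bins in this band; the epsilon avoids log10(0)
        energy = np.sum(magnitudes[in_band] ** 2)
        levels.append(10 * np.log10(energy + 1e-12))
    return np.array(levels)

# A 1 kHz test tone: 2048 samples at 44.1 kHz
sample_rate = 44100
t = np.arange(2048) / sample_rate
tone = np.sin(2 * np.pi * 1000 * t)
edges = [20, 250, 500, 2000, 4000, 20000]  # hypothetical band edges in Hz
levels = band_levels_db(tone, sample_rate, edges)
print(levels.round(1))
```

The 500–2000 Hz band should stand far above the others, which is exactly the "one tall bar" you would see on an analyzer for a pure tone. Real analyzers usually space bands logarithmically, since that is closer to how the cochlea spreads frequencies out.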

For a more detailed look at the biology of hearing, check out this other post:

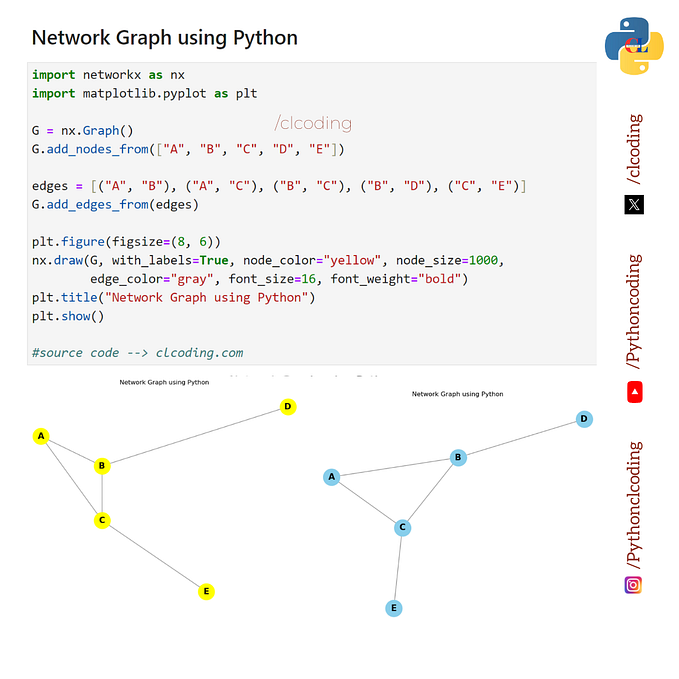

To build an audio spectrum analyzer (ASA), we need Python code that spits out frequency levels over time, straight from your microphone. Librosa can compute these frequency levels (and do many more audio-related things), but I ran into performance and other issues, so I opted to build one myself on top of a lower-level library, PyAudio, which gives direct access to the microphone. My end project also requires custom graphics. For now, though, here's a loose specification:
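As a rough sketch of how the two pieces might connect, assuming the stream is opened with `format=pyaudio.paInt16` and one channel: `stream.read(CHUNK)` returns raw bytes, which NumPy can reinterpret as 16-bit samples before the FFT. The helper `chunk_to_spectrum` and the `CHUNK`/`SAMPLE_RATE` values are hypothetical. To keep the sketch runnable without audio hardware, the PyAudio calls are shown only as comments and one chunk is faked with a 440 Hz tone.

```python
import numpy as np

CHUNK = 1024          # samples per read (assumed value)
SAMPLE_RATE = 44100   # Hz (assumed value)

def chunk_to_spectrum(raw_bytes, sample_rate=SAMPLE_RATE):
    """Convert one block of mono 16-bit PCM bytes into (frequencies, magnitudes)."""
    # Reinterpret the bytes as int16 samples, then copy to float for the math
    samples = np.frombuffer(raw_bytes, dtype=np.int16).astype(np.float64)
    samples /= 32768.0  # scale to the range [-1.0, 1.0)
    magnitudes = np.abs(np.fft.rfft(samples * np.hanning(len(samples))))
    freqs = np.fft.rfftfreq(len(samples), d=1.0 / sample_rate)
    return freqs, magnitudes

# With a live microphone, the bytes would come from PyAudio, e.g.:
#   p = pyaudio.PyAudio()
#   stream = p.open(format=pyaudio.paInt16, channels=1, rate=SAMPLE_RATE,
#                   input=True, frames_per_buffer=CHUNK)
#   raw = stream.read(CHUNK)
# Here one chunk is faked with a 440 Hz tone instead.
t = np.arange(CHUNK) / SAMPLE_RATE
fake = (0.5 * 32767 * np.sin(2 * np.pi * 440 * t)).astype(np.int16).tobytes()
freqs, mags = chunk_to_spectrum(fake)
print(f"peak near {freqs[np.argmax(mags)]:.0f} Hz")
```

Calling this once per chunk in a loop gives frequency levels over time. Note that the frequency resolution is `SAMPLE_RATE / CHUNK` (about 43 Hz here), so the reported peak lands on the nearest bin rather than exactly on 440 Hz. Larger chunks give finer resolution at the cost of a slower update rate.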